Federated Learning: Decentralized AI Training with Privacy-Preserving Protocols

In the age of data-driven technology, the introduction of artificial intelligence (AI) is transforming everything from our businesses to our daily lives.

But alongside AI’s soaring capabilities, data privacy concerns are also rising.

According to IBM’s Cost of a Data Breach Report 2024, 94% of executives agree that preserving data privacy is a critical priority in AI development. As privacy concerns grow and demand for privacy-preserving protocols rises, federated learning has emerged as a transformative approach to AI training.

Federated learning enables businesses to build intelligent AI models without accessing raw data, which makes it a game-changer for industries where privacy is paramount, such as healthcare, finance, and multiplatform mobile app development.

It empowers secure and collaborative AI by allowing models to train on decentralized data sources, such as user devices, without compromising confidentiality.

This blog explains how federated learning works, what advantages it offers, and which privacy-preserving protocols it relies on. We will also look at how federated learning is transforming industries like application development in practice.

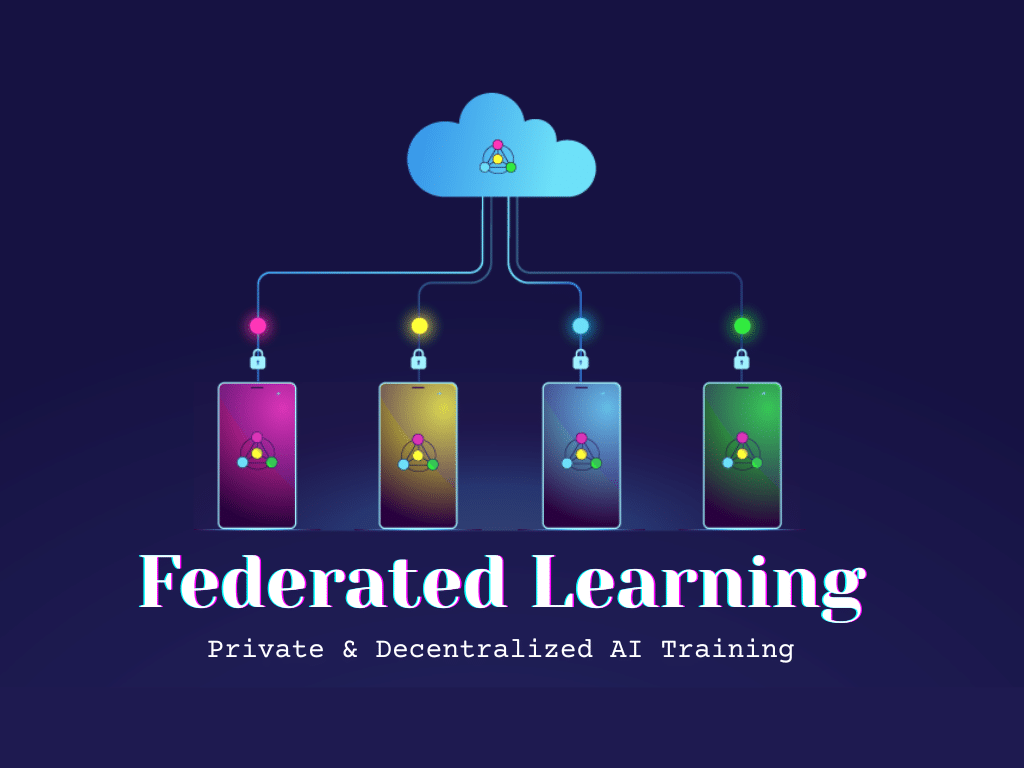

What is Federated Learning?

Federated learning is a decentralized approach to machine learning where AI models are trained collaboratively across multiple devices or servers while keeping the data localized. Instead of collecting data on a central server, each device trains the model locally and only shares the model updates with the central server.

The localized model updates are aggregated to create a global model. This approach minimizes data movement and reduces the risk of data breaches, making it ideal for organizations involved in sensitive sectors or handling user-centric applications like custom iOS app development services or eCommerce platforms.

How Does Federated Learning Work?

Because federated learning is decentralized, AI models can be trained collaboratively, for example in application development, without sharing raw data. Training follows a multi-step process designed to protect privacy while keeping model training effective at each stage.

Let’s break down the working process of federated learning by understanding the key steps it involves:

Global Model Initialization

The process of training an AI model with federated learning begins with initializing a global model on the central server and sending it to multiple devices and servers. The global model serves as the starting point for federated learning.

Local Model Training

Each device or server downloads the global model and trains it locally using its private data. For example, in the context of application development, each application uses its data (like browsing history or text input) to train the model locally.
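As a rough illustration of this step, the sketch below trains a toy linear model on one device's private samples, starting from the global weights. The function name `local_train`, the two-parameter model, and the learning rate are assumptions made for the example and are not part of any specific federated learning framework.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y) held privately on the device


def local_train(global_weights: List[float],
                local_data: List[Sample],
                lr: float = 0.05,
                epochs: int = 20) -> List[float]:
    """Run a few epochs of gradient descent on one device's private data."""
    w, b = global_weights  # start from the global model (slope, intercept)
    n = len(local_data)
    for _ in range(epochs):
        # Gradients of the mean squared error for the model y = w * x + b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in local_data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in local_data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def mse(weights: List[float], data: List[Sample]) -> float:
    """Mean squared error of the toy model on a dataset."""
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Hypothetical private data on one device, generated from y = 2x + 1
device_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
global_weights = [0.0, 0.0]
local_weights = local_train(global_weights, device_data)
```

The raw samples in `device_data` are only ever read inside `local_train`; the only thing that leaves the function is the list of trained weights.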

Model Update Sharing

Once the model is trained locally, each device sends its model update, not its data, to the central server. An update typically consists of gradients or updated weights (often the difference between the locally trained weights and the global weights), never the raw data.
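To make the contents of an update concrete, here is a minimal sketch of what a device might send. The payload layout (a weight delta plus a sample count) and the name `build_update` are hypothetical; real systems define their own formats.

```python
from typing import Dict, List


def build_update(global_weights: List[float],
                 local_weights: List[float],
                 num_samples: int) -> Dict[str, object]:
    """Package the change in weights; no training samples are included."""
    delta = [lw - gw for lw, gw in zip(local_weights, global_weights)]
    return {"delta": delta, "num_samples": num_samples}


# Hypothetical weights before and after local training on 120 samples
update = build_update([0.5, -1.0], [0.75, -0.5], num_samples=120)
```

The sample count is included because the server can use it to weight each device's contribution during aggregation.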

Update Aggregation

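After the local model updates are received from the participating devices, the central server aggregates them to refine the global model, commonly by averaging the updates with each device weighted by the amount of data it trained on. Techniques like secure aggregation can be layered on top so that the server sees only the combined result and no individual update reveals sensitive information.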
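One common way to aggregate is federated averaging, where each device's weights count in proportion to its number of training samples. The sketch below assumes each device reports a `(weights, num_samples)` pair; the function name and data layout are illustrative only, and secure aggregation is not shown here.

```python
from typing import List, Tuple

ClientResult = Tuple[List[float], int]  # (locally trained weights, sample count)


def federated_average(results: List[ClientResult]) -> List[float]:
    """Average client weights, weighting each client by its sample count."""
    total_samples = sum(n for _, n in results)
    if total_samples == 0:
        raise ValueError("no training samples reported")
    dim = len(results[0][0])
    return [
        sum(weights[i] * n for weights, n in results) / total_samples
        for i in range(dim)
    ]


# Two hypothetical clients: the second trained on three times as much data
client_results = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
new_global = federated_average(client_results)  # [2.5, 3.5]
```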

Global Model Update

Once aggregation is complete, the global model is updated and sent back to the devices. This cycle repeats round after round, so the model keeps improving while the data stays on the devices, which matters for industries like website development services.
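Putting the steps together, the following sketch simulates several training rounds on a single machine. It assumes a toy linear model, three hypothetical clients whose data follows y = 2x + 1, and plain weighted averaging on the server; all names and hyperparameters are illustrative.

```python
from typing import List, Tuple

Sample = Tuple[float, float]


def local_train(weights: List[float], data: List[Sample],
                lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Local step: gradient descent on one client's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return [w, b]


def federated_average(results: List[Tuple[List[float], int]]) -> List[float]:
    """Server step: sample-weighted average of the client weights."""
    total = sum(n for _, n in results)
    dim = len(results[0][0])
    return [sum(w[i] * n for w, n in results) / total for i in range(dim)]


def run_federated_training(clients: List[List[Sample]],
                           rounds: int = 200) -> List[float]:
    global_weights = [0.0, 0.0]                      # 1. initialize global model
    for _ in range(rounds):
        results = [
            (local_train(global_weights, data), len(data))  # 2-3. train, share
            for data in clients
        ]
        global_weights = federated_average(results)  # 4-5. aggregate, update
    return global_weights


# Each inner list stays with its client; only weights reach the server
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(1.0, 3.0), (2.0, 5.0)],
    [(0.5, 2.0), (1.5, 4.0), (2.0, 5.0)],
]
final_weights = run_federated_training(clients)  # approaches [2.0, 1.0]
```

In a real deployment the clients would run on separate devices and only a subset might participate in each round; the loop above keeps everything in one process purely for readability.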

Privacy-Preserving Protocols in Federated Learning

To truly preserve user privacy, federated learning integrates multiple advanced protocols. These protocols collectively make it difficult for adversaries to reconstruct data or identify users from model updates. By using these protocols in multiplatform mobile app development, businesses can ensure robust user data privacy.

Here are four advanced privacy-preserving protocols used for decentralized AI training with federated learning:

Differential Privacy

Differential privacy adds calibrated statistical noise to the updates sent from local devices to the central server, usually after limiting (clipping) how large any single update can be. Even if an update is intercepted, the injected randomness makes it very difficult to reverse-engineer the original data, at the cost of some model accuracy.
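A minimal sketch of this idea, assuming the commonly used recipe of clipping an update to a maximum L2 norm and then adding Gaussian noise. The names `clip_update` and `privatize`, and the values of `max_norm` and `noise_multiplier`, are illustrative assumptions; the actual privacy guarantee depends on how these parameters are chosen and accounted for across rounds, which is not shown here.

```python
import math
import random
from typing import List


def clip_update(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def privatize(update: List[float], max_norm: float,
              noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip the update, then add Gaussian noise scaled to the clipping bound."""
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


rng = random.Random(0)        # seeded only to make the example repeatable
raw_update = [3.0, 4.0]       # hypothetical update with L2 norm 5
clipped = clip_update(raw_update, max_norm=1.0)
noisy_update = privatize(raw_update, max_norm=1.0,
                         noise_multiplier=1.1, rng=rng)
```

Clipping bounds how much any one device can influence the result, which is what lets the added noise be calibrated to a known scale.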

Secure Multi-Party Computation (SMPC)

SMPC allows multiple devices or servers to compute results without revealing their individual inputs. In federated learning for application development, this protocol ensures that no single party has access to the full dataset.
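One simple building block behind SMPC is additive secret sharing. In the sketch below, each party splits its private integer into random shares that sum to the value modulo a fixed number, so any single share looks random on its own. The modulus, function names, and three-party setup are assumptions for illustration, and the "network" is simulated inside one process.

```python
import secrets
from typing import List

MODULUS = 2**61 - 1  # arithmetic is done modulo this value (illustrative choice)


def make_shares(secret: int, n_parties: int) -> List[int]:
    """Split a value into n random shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values: List[int]) -> int:
    """Compute the total without any party seeing another party's input."""
    n = len(private_values)
    # Party i creates one share for every party j (including itself)
    all_shares = [make_shares(v, n) for v in private_values]
    # Party j adds up the shares it received and publishes only that sum
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS
        for j in range(n)
    ]
    # Combining the published partial sums reveals only the overall total
    return sum(partial_sums) % MODULUS


values = [12, 30, 7]        # hypothetical private inputs, e.g. quantized updates
total = secure_sum(values)  # 49
```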

Homomorphic Encryption

Homomorphic encryption enables computations on encrypted data, meaning that the server can aggregate updates without even accessing the raw or decrypted information. While computationally intensive, it ensures strong privacy preservation.
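To show what "computing on encrypted data" can look like, here is a toy version of the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields a ciphertext of the sum of the plaintexts. This is a teaching sketch under loud assumptions: the primes are small and of a special form, so the scheme as written is not secure, and the updates are assumed to be already quantized to non-negative integers.

```python
import math
import secrets

# Toy key material: two known Mersenne primes. NOT secure; real deployments
# use large random primes and a vetted cryptography library.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N_SQ = N * N
LAMBDA = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAMBDA, -1, N)  # modular inverse (Python 3.8+)


def encrypt(message: int) -> int:
    """Paillier encryption with generator g = N + 1."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, message, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(ciphertext: int) -> int:
    """Paillier decryption using the private values LAMBDA and MU."""
    x = pow(ciphertext, LAMBDA, N_SQ)
    return ((x - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Homomorphic addition: the product of ciphertexts encrypts the sum."""
    return (c1 * c2) % N_SQ


# Devices encrypt their (hypothetical, integer-quantized) updates
ciphertexts = [encrypt(v) for v in [12, 30, 7]]

# The server combines ciphertexts without ever decrypting them
encrypted_total = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_total = add_encrypted(encrypted_total, c)

# Only the holder of the private key can read the aggregate
total = decrypt(encrypted_total)  # 49
```

In this arrangement the aggregating server would hold only the public parts (`N` and the ciphertexts), while decryption would be performed by whoever holds the private key.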

Trusted Execution Environments (TEEs)

TEEs provide isolated environments within devices for secure computation. Data processed within a TEE is protected from the rest of the system, so sensitive computations remain secure in scenarios such as multiplatform mobile app development.

Real-World Applications of Federated Learning

With the rising use of AI models across different industries, federated learning is increasingly being adopted for model training by businesses and developers. Organizations can now build powerful models without centralizing data, making federated learning particularly valuable for data-sensitive industries.

Keeping that in mind, let’s take a look at some notable real-world applications of federated learning for decentralized AI training with privacy preservation:

Healthcare

Hospitals use federated learning to collaboratively train models that improve diagnostic accuracy without sharing sensitive patient data. This also helps in understanding medical conditions and creating treatment plans for patients.

Finance

Organizations from the finance industry use federated learning to create strong fraud detection models that are trained on distributed financial data across different institutions without sharing sensitive information about clients.

Website Development

Federated learning enables website development services to gather usage patterns for optimization without tracking individual users. This means privacy-focused metrics can still guide content strategy and design decisions.

Edge Devices and IoT

In IoT applications, edge devices (such as smart home devices and wearables) can participate in federated learning to collaboratively train AI models for tasks like anomaly detection, user behavior analysis, or energy management.

Mobile Application Development

Custom iOS app development services leverage federated learning to create robust AI models for their mobile applications. Features like personalized content feeds and predictive keyboards can be created using these models.

Autonomous Vehicles

Federated learning also supports autonomous vehicle systems by allowing vehicles to train models locally on driving data (like road conditions or driving behavior). This makes self-driving systems smarter without uploading the raw driving data.

Concluding Thoughts

Federated learning presents a promising solution for decentralized training of robust AI models while ensuring data privacy in sensitive industries like healthcare, finance, and application development. Its working process ensures that sensitive information stays on local devices and servers.

With advanced privacy-preserving protocols like differential privacy and homomorphic encryption, federated learning effectively addresses growing concerns around data privacy. As the demand for privacy-preserving solutions continues to grow, federated learning is poised to play a pivotal role in the future of AI training.