Adversarial Attacks on AI Models and Defense Mechanisms Explained in Application Development

With its unparalleled computational capabilities, Artificial Intelligence (AI) is taking every industry by storm. AI models are advancing rapidly, and so is their potential to transform businesses globally.

However, with the increasing implementation of AI across different systems, several security threats and challenges are also emerging that require immediate attention.

One of these challenges is adversarial attacks on AI models.

These attacks deliberately attempt to exploit vulnerabilities in AI models, threatening their integrity, reliability, and security. According to a study by MIT CSAIL, adversarial attacks can reduce the accuracy of AI models by 85%.

Considering the significant negative impact of adversarial attacks on AI models, it is crucial to understand these threats and implement effective defense mechanisms.

In this blog, we will discuss adversarial attacks, their types, and effective defense mechanisms against them. We will also look at how more secure AI models can be used in critical industries like application development.

What are Adversarial Attacks?

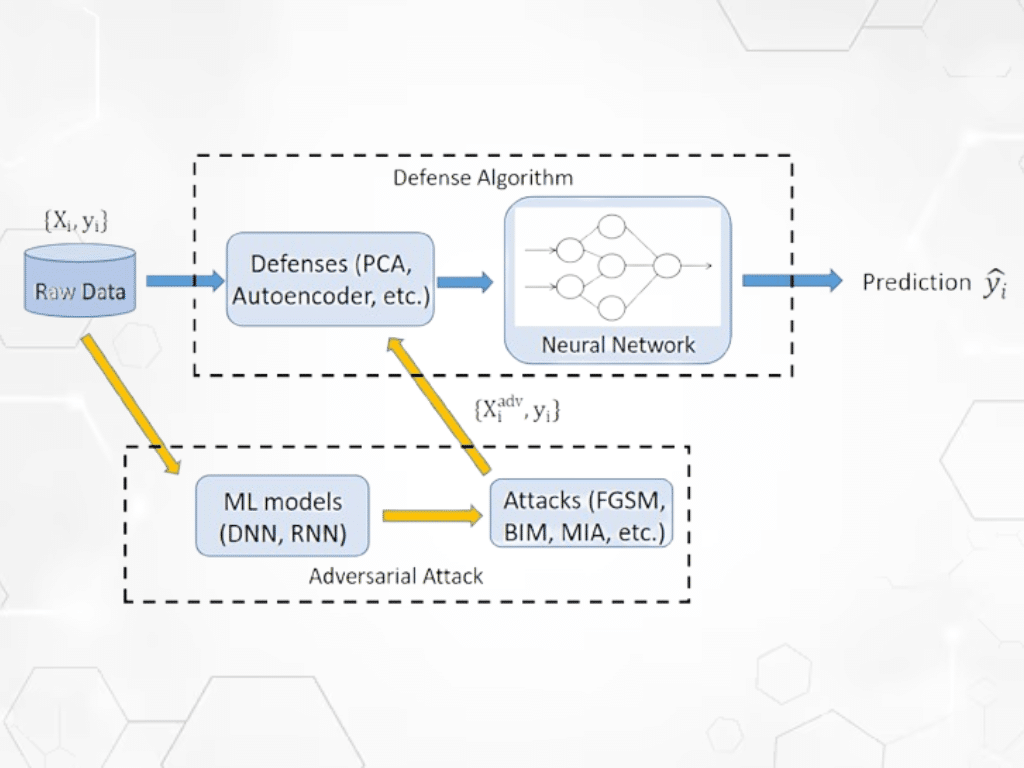

Adversarial attacks use inputs that are intentionally crafted to exploit the vulnerabilities of AI and machine learning models. These attacks make small, often imperceptible changes to the input data, causing the model to make incorrect predictions or classifications and generate inaccurate outputs.

These attacks have become a major security threat not just for AI models but also for every digital solution that relies on AI. This is why professional multiplatform mobile app development companies have begun to implement robust defense mechanisms against adversarial attacks in their web and mobile applications.

Types of Adversarial Attacks on AI Models

Adversarial attacks are classified into different types based on their nature, effect, and method of implementation. Understanding these types is crucial for developing robust defense mechanisms for the AI models used in modern digital solutions like image recognition systems, security devices, and applications.

Here are some prominent types of adversarial attacks that affect common AI models:

White-Box Attacks

White-box attacks occur when the attackers have full access to the target AI model’s parameters, architecture, and training data. These attacks are highly precise and can exploit even minor vulnerabilities. Gradient-based techniques such as the Fast Gradient Sign Method (FGSM) and Projected Gradient Descent (PGD) are commonly used for such attacks.
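To make the idea concrete, here is a minimal sketch of a single FGSM step against a tiny logistic regression model. The weights, bias, input, and epsilon are hypothetical values picked for illustration; a real attack would target a trained network and use its autograd gradients.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, y_true, epsilon):
    """Single FGSM step: x_adv = x + epsilon * sign(dLoss/dx)."""
    p = predict_proba(weights, bias, x)
    # For binary cross-entropy loss, dLoss/dx_i = (p - y_true) * w_i.
    return [xi + epsilon * sign((p - y_true) * w) for xi, w in zip(x, weights)]

# Hypothetical toy model and input, chosen only for illustration.
weights, bias = [2.0, -3.0, 1.0], 0.1
x, y_true = [0.5, 0.1, 0.3], 1

x_adv = fgsm(weights, bias, x, y_true, epsilon=0.3)
print(predict_proba(weights, bias, x))      # above 0.5: classified as class 1
print(predict_proba(weights, bias, x_adv))  # below 0.5: prediction flipped
```

The attacker needs the weights to compute the gradient, which is exactly the access a white-box setting assumes. PGD can be thought of as repeating this step several times with a smaller step size while keeping the total change within epsilon.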

Black-Box Attacks

In contrast to white-box attacks, black-box adversarial attacks occur when the attackers have no access to the model’s internal workings. Instead, the attackers rely on repeated queries to the model or on the transferability of adversarial examples crafted against a different, similar model. Two primary strategies are employed for such attacks: substitute models and query-based attacks.
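A query-based attack can be sketched as follows, assuming the deployed model returns a confidence score for each query. The `remote_model` function is a hypothetical stand-in for such an endpoint; the attacker code never reads its internals and only estimates the gradient from the returned scores.

```python
import math

def remote_model(x):
    """Stand-in for a deployed model: the attacker sees only the returned score."""
    z = 2.0 * x[0] - 3.0 * x[1] + 1.0 * x[2] + 0.1
    return 1.0 / (1.0 + math.exp(-z))

def estimate_gradient(query, x, h=1e-3):
    """Finite-difference gradient estimate using two queries per feature."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((query(up) - query(down)) / (2 * h))
    return grad

def query_attack(query, x, epsilon):
    """Lower the class-1 score by stepping against the estimated gradient sign."""
    grad = estimate_gradient(query, x)
    return [xi - epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

x = [0.5, 0.1, 0.3]
x_adv = query_attack(remote_model, x, epsilon=0.3)
print(remote_model(x), remote_model(x_adv))
```

This sketch spends two queries per input feature, which is one reason rate limiting and query monitoring are often suggested as partial mitigations for this class of attack.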

Evasion Attacks

Evasion attacks generally occur during the deployment phase of an AI model. The inputs in these attacks are designed to evade detection. For example, an attacker may alter a piece of malware slightly so that it slips past an AI-powered antivirus system. Mobile apps, including those built with custom iOS app development services, can reduce this risk by validating inputs before they reach the model.

Poisoning Attacks

Poisoning attacks take place during the training phase of the target AI model. Attackers introduce malicious data into the training set, corrupting the learning process and embedding errors into the model. Website development services typically protect their training pipelines with measures such as encryption and data integrity checks to defend their models against these attacks.
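The effect of poisoning can be illustrated with a deliberately tiny example. The sketch below assumes a one-dimensional nearest-centroid classifier and made-up training points; the three injected samples are mislabeled on purpose to drag the decision threshold.

```python
def train_threshold(samples):
    """Fit a 1-D nearest-centroid classifier and return its decision threshold.

    Assumes class 1 has the larger mean, which holds for the toy data below.
    """
    class0 = [x for x, y in samples if y == 0]
    class1 = [x for x, y in samples if y == 1]
    return (sum(class0) / len(class0) + sum(class1) / len(class1)) / 2

def predict(threshold, x):
    return 1 if x > threshold else 0

# Hypothetical clean training set: (feature, label) pairs.
clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
# Poisoned samples: class-1-like values deliberately labeled as class 0.
poison = [(10.0, 0)] * 3

clean_threshold = train_threshold(clean)
poisoned_threshold = train_threshold(clean + poison)

print(predict(clean_threshold, 6.5))     # 1 with the clean model
print(predict(poisoned_threshold, 6.5))  # 0 once the training set is poisoned
```

Only three bad samples are enough to move the threshold from 5.0 to 7.0 here, which is why validating the source and labels of training data matters.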

Model Inversion and Extraction Attacks

In model inversion and extraction attacks, the attackers attempt to reconstruct training data or steal proprietary model parameters, putting sensitive data and intellectual property at risk. These attacks are especially dangerous for AI models used in systems that deal with confidential data, such as financial applications.
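As a rough illustration of extraction, the sketch below assumes the simplest possible case: a linear model exposed through a prediction endpoint. The `secret_model` function and its coefficients are hypothetical. Real models are nonlinear, so practical attacks need far more queries and usually train a surrogate model instead of solving for parameters directly.

```python
def secret_model(x):
    """Stand-in for a proprietary linear model behind an API."""
    return 1.5 * x[0] - 2.0 * x[1] + 0.25

def extract_linear(query, n_features):
    """Recover the weights and bias of a linear model from n_features + 1 queries."""
    origin = [0.0] * n_features
    bias = query(origin)  # f(0) equals the bias
    weights = []
    for i in range(n_features):
        unit = list(origin)
        unit[i] = 1.0
        weights.append(query(unit) - bias)  # f(e_i) - f(0) equals w_i
    return weights, bias

stolen_weights, stolen_bias = extract_linear(secret_model, n_features=2)
print(stolen_weights, stolen_bias)
```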

Defense Mechanisms Against Adversarial Attacks

Adversarial attacks can considerably undermine the accuracy and dependability of AI models. In light of the growing complexity of these attacks and their negative impact, several defense mechanisms have been developed for various industries, such as multiplatform mobile app development.

Let’s take a look at some of the most effective defense mechanisms widely used against adversarial attacks:

Adversarial Training

One of the most effective defense mechanisms, adversarial training involves augmenting the training data with adversarial examples so the AI model learns to recognize and resist them. As this method requires considerable computational resources, it is mostly practiced by professional website development services.
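Here is a minimal sketch of adversarial training, assuming a logistic regression model and FGSM as the source of adversarial examples. The dataset and the hyperparameters (`eps`, `lr`, `epochs`) are illustrative assumptions. In each epoch, the model crafts adversarial copies of the training points against its current weights and then trains on both sets.

```python
import math

def predict_proba(w, b, x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Perturb x in the direction that increases the loss for label y."""
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, eps=0.5, lr=0.1, epochs=50):
    n_features = len(data[0][0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        # Augment the clean data with adversarial copies for the current model.
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        # Plain gradient descent on the averaged cross-entropy gradient.
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b

# Hypothetical, well-separated toy dataset: ([features], label).
data = [([-2.0, -2.0], 0), ([-1.5, -2.5], 0), ([-2.5, -1.5], 0),
        ([2.0, 2.0], 1), ([1.5, 2.5], 1), ([2.5, 1.5], 1)]

w, b = adversarial_train(data)
```

Because every epoch doubles the batch and needs an extra pass to craft the adversarial copies, the training cost roughly doubles even in this toy setup, which hints at why the method is considered resource-intensive.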

Gradient Masking

Gradient masking involves hiding the gradient information of the model that attackers can exploit to create adversarial examples. This makes it difficult for the attackers to craft adversarial inputs. The gradients of the model can be distorted or concealed by adding noise or using non-differentiable operations. Note that gradient masking is generally considered a weak defense on its own, since attackers can often work around it with black-box or transfer-based attacks.

Input Transformation

Input transformation mechanisms involve modifying the input data before processing to disrupt adversarial attacks and mitigate their impact. Essentially, this method cleans the input data, removing or reducing adversarial manipulations before they can affect the model’s output in the application.

Ensemble Methods

Ensemble methods leverage the collective intelligence of multiple models to compensate for individual model weaknesses, enhancing overall system robustness. Although this method is resource-intensive, it acts as a robust layer of security in multiplatform mobile app development against adversarial attacks.
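A simple way to picture this is majority voting. The sketch below assumes three hypothetical rule-based classifiers that each look at the input differently; a perturbation that fools one of them does not change the ensemble decision.

```python
from collections import Counter

def majority_vote(models, x):
    """Return the label predicted by most models in the ensemble."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Hypothetical classifiers, each relying on a different view of the input.
models = [
    lambda x: int(x[0] > 0.5),
    lambda x: int(x[1] > 0.5),
    lambda x: int(sum(x) / len(x) > 0.5),
]

clean = [0.9, 0.8, 0.7]
perturbed = [0.4, 0.8, 0.7]  # the first feature was pushed below its threshold

print(models[0](perturbed))               # 0: the first model is fooled
print(majority_vote(models, perturbed))   # 1: the ensemble still answers correctly
```

The benefit depends on the models failing differently. If all members share the same weakness, an adversarial example that transfers between them can still defeat the vote.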

Model Hardening

Model hardening is a defense mechanism that strengthens the internal structure and training processes of AI models, enhancing their resilience against adversarial inputs. This method is especially beneficial in sectors like healthcare, finance, and custom iOS app development services, where reliability is paramount.

Building Resilient AI Models in Application Development

App development is one of the leading industries that have rapidly adopted AI models to create more robust and reliable digital solutions. With the increasing use of AI in this industry, the need to build more resilient models that stand strong against adversarial attacks is also rising.

Let’s take a look at some of the best practices used by professional website development services to build resilient AI models:

Penetration Testing

Penetration testing is used to simulate adversarial attacks on AI models under controlled conditions. This helps in evaluating the robustness and integrity of the model against the attacks it may face in real-life situations.
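One way to structure such a test is a small harness that reports accuracy with and without a simulated attack. The model, attack, and dataset below are hypothetical placeholders; in practice the `attack` callable would wrap a real attack such as FGSM or PGD.

```python
def evaluate(model, dataset, attack=None):
    """Return accuracy on the dataset, optionally after attacking each input."""
    correct = 0
    for x, y in dataset:
        x_in = attack(x, y) if attack else x
        correct += model(x_in) == y
    return correct / len(dataset)

def model(x):
    """Toy classifier on a single feature."""
    return int(x > 0.5)

def shift_attack(x, y, eps=0.2):
    """Simulated attack: push the feature toward the opposite class by eps."""
    return x - eps if y == 1 else x + eps

# Hypothetical test set: (feature, label) pairs.
dataset = [(0.1, 0), (0.4, 0), (0.6, 1), (0.9, 1)]

print(evaluate(model, dataset))                       # 1.0 on clean inputs
print(evaluate(model, dataset, attack=shift_attack))  # 0.5 under attack
```

The gap between the two numbers is a simple robustness metric that can be tracked release over release.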

Pre-trained Defense Models

Website development services also use open-source defense models that are designed to withstand adversarial inputs. These models often come with community-tested defense layers, reducing the system’s exposure to known attack vectors.

Continuous Monitoring Systems

Adversarial attacks are dynamic and can evolve, making static defenses insufficient. Continuous monitoring helps detect anomalies in AI behavior and flags suspicious inputs in real time for critical multiplatform mobile app development projects.
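As a minimal sketch of this idea, the monitor below tracks the model’s recent confidence scores and flags any score that deviates sharply from that baseline. The class name, window size, and z-score threshold are assumptions made for illustration; production systems usually track several signals, not confidence alone.

```python
from collections import deque
import statistics

class ConfidenceMonitor:
    """Flags predictions whose confidence is far from the recent baseline."""

    def __init__(self, window=50, z_threshold=3.0, min_history=10):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def observe(self, confidence):
        """Return True if the confidence looks anomalous."""
        suspicious = False
        if len(self.history) >= self.min_history:
            mean = statistics.mean(self.history)
            stdev = statistics.pstdev(self.history)
            # With zero spread in the baseline, nothing is flagged.
            if stdev > 0 and abs(confidence - mean) / stdev > self.z_threshold:
                suspicious = True
        if not suspicious:
            # Only normal-looking scores update the baseline.
            self.history.append(confidence)
        return suspicious

monitor = ConfidenceMonitor()
normal_scores = [0.90, 0.92] * 10  # hypothetical confidences from regular traffic
flags = [monitor.observe(score) for score in normal_scores]
```

After this warm-up, calling `monitor.observe(0.30)` returns `True`, while a score close to the baseline, such as `0.91`, does not raise a flag.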

Input Sanitization

Preprocessing layers can be introduced to normalize, compress, or filter inputs before feeding them into the model. Techniques like image compression or feature squeezing can reduce the effectiveness of adversarial inputs.
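For example, bit-depth reduction, one form of feature squeezing, can be sketched in a few lines. The function name and the sample pixel values are illustrative, and inputs are assumed to be scaled to the range 0 to 1.

```python
def squeeze_bit_depth(pixels, bits=3):
    """Clip values to [0, 1] and quantize them to 2**bits levels."""
    levels = 2 ** bits - 1
    return [round(min(max(p, 0.0), 1.0) * levels) / levels for p in pixels]

# Hypothetical pixel values: a clean input and a slightly perturbed copy.
clean = [0.0, 0.43, 1.0]
perturbed = [0.02, 0.45, 0.97]

print(squeeze_bit_depth(clean) == squeeze_bit_depth(perturbed))  # True
```

Both inputs collapse to the same quantized values, so the small perturbation never reaches the model. The trade-off is lost detail, and a perturbation that happens to cross a quantization boundary can still survive.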

Adversarial Detection Algorithms

Additional detection algorithms or layers, specifically trained to detect adversarial patterns in input data, can be added to the AI models. These algorithms act as gatekeepers, blocking suspicious inputs before they reach critical systems.
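A trained detector is beyond the scope of a short example, so the sketch below uses a simpler, untrained stand-in: a prediction-consistency check. It flags an input when squeezing it changes the model’s score sharply. The `score` model, the squeezing level, and the threshold are all hypothetical choices.

```python
import math

def squeeze(x, bits=1):
    """Clip to [0, 1] and quantize; with bits=1 every value becomes 0 or 1."""
    levels = 2 ** bits - 1
    return [round(min(max(v, 0.0), 1.0) * levels) / levels for v in x]

def score(x):
    """Hypothetical steep linear model, deliberately sensitive to small changes."""
    w, b, k = [1.0, -1.0, 1.0, -1.0], -0.1, 30.0
    z = k * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    return 1.0 / (1.0 + math.exp(-z))

def looks_adversarial(x, threshold=0.5):
    """Flag x when the raw and squeezed inputs get very different scores."""
    return abs(score(x) - score(squeeze(x))) > threshold

clean = [1.0, 1.0, 0.0, 0.0]
perturbed = [1.0, 0.9, 0.1, 0.0]  # small changes that flip the model's decision

print(looks_adversarial(clean))      # False
print(looks_adversarial(perturbed))  # True
```

A gatekeeper like this can reject or quarantine flagged inputs before they reach the main model. It catches only perturbations that squeezing removes, so it works best alongside the other defenses rather than in place of them.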

Wrapping Up

As AI models continue to revolutionize multiple industries around the globe, adversarial attacks remain a major threat to the integrity, reliability, and performance of AI-powered systems. Different types of attacks affect AI models differently, and understanding each of them is important.

For developers, business owners, and other stakeholders working with website development services to create digital solutions, investing in robust defense mechanisms is imperative to safeguard the AI models used in their systems. Adversarial robustness is a cornerstone of every AI-powered digital solution.